| Model | Success Rate ↑ | Search Efficiency ↑ | Task Completeness ↑ | Success Rate for SubTasks ↑ | |||

|---|---|---|---|---|---|---|---|

| Search | Manipulate | Transport | Composite | ||||

| General-purpose VLMs | |||||||

| Qwen2.5-VL-7B-Instruct | 12.38% | 10.87% | 27.53% | 6.45% | 23.55% | 7.56% | 0.95% |

| Qwen2-VL-7B-Instruct | 14.79% | 11.97% | 38.67% | 23.33% | 25.50% | 2.82% | 0.00% |

| Qwen2.5-VL-72B-Instruct | 31.75% | 22.61% | 50.62% | 52.14% | 38.89% | 21.90% | 0.00% |

| Qwen2-VL-72B-Instruct | 39.00% | 28.88% | 54.56% | 50.00% | 52.36% | 33.19% | 0.00% |

| Claude 3.5-Sonnet | 45.35% | 28.05% | 64.12% | 54.25% | 50.51% | 51.22% | 3.84% |

| Qwen-VL-Max | 49.81% | 36.28% | 68.39% | 63.87% | 63.21% | 45.16% | 1.90% |

| GPT-4o | 66.67% | 41.68% | 79.07% | 69.03% | 79.26% | 71.95% | 14.42% |

| Visual Reasoning Models | |||||||

| QVQ-72B-Preview | 7.54% | 6.39% | 36.33% | 4.35% | 7.50% | 10.53% | 0.00% |

| Kimi-K1.5† | 46.00% | - | - | - | - | - | - |

| GPT-o3-mini | 56.55% | 26.93% | 67.41% | 78.57% | 59.32% | 66.67% | 0.00% |

| Gemini-2.0 Flash Thinking | 56.74% | 43.01% | 71.70% | 71.05% | 75.60% | 40.67% | 8.89% |

| Claude-3.7-Sonnet-thinking | 67.70% | 37.95% | 78.63% | 69.12% | 75.88% | 71.94% | 13.79% |

| GPT-o1 | 71.73% | 43.06% | 82.49% | 78.42% | 79.10% | 67.36% | 13.16% |

| Embodied-Interactor-7B (ours-1st) | 25.46% | 24.75% | 53.67% | 30.97% | 27.09% | 29.20% | 3.81% |

| Embodied-Explorer-7B (ours-2nd) | 65.39% | 46.25% | 77.73% | 60.00% | 75.92% | 72.24% | 26.67% |

| Embodied-Reasoner-2B (ours-3rd) | 59.09% | 40.05% | 72.04% | 64.52% | 68.56% | 63.20% | 14.29% |

| Embodied-Reasoner-3B (ours-3rd) | 73.67% | 50.88% | 83.34% | 65.16% | 86.96% | 77.60% | 39.05% |

| Embodied-Reasoner-7B (ours-3rd) | 80.96% | 55.07% | 86.30% | 65.16% | 93.31% | 87.20% | 54.29% |

We compare the performance of Embodied-Reasoner against advanced VLMs and visual reasoning models. Success Rate (%) measures whether a task is successfully completed. Search Efficiency (%) evaluates task efficiency—more steps indicate lower efficiency. Task Completeness (%) computes the proportion of predicted actions that belong to the set of key actions.

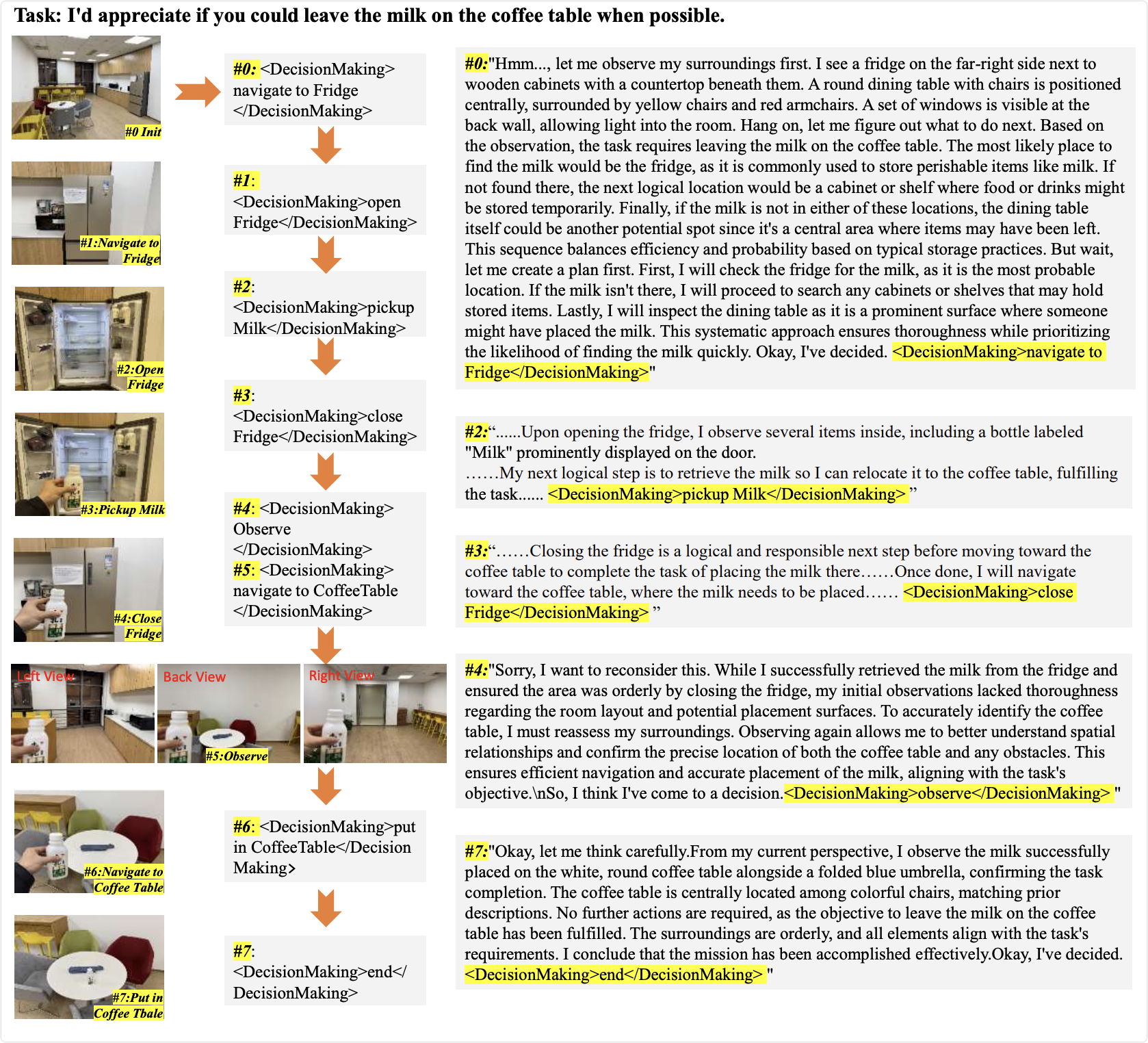

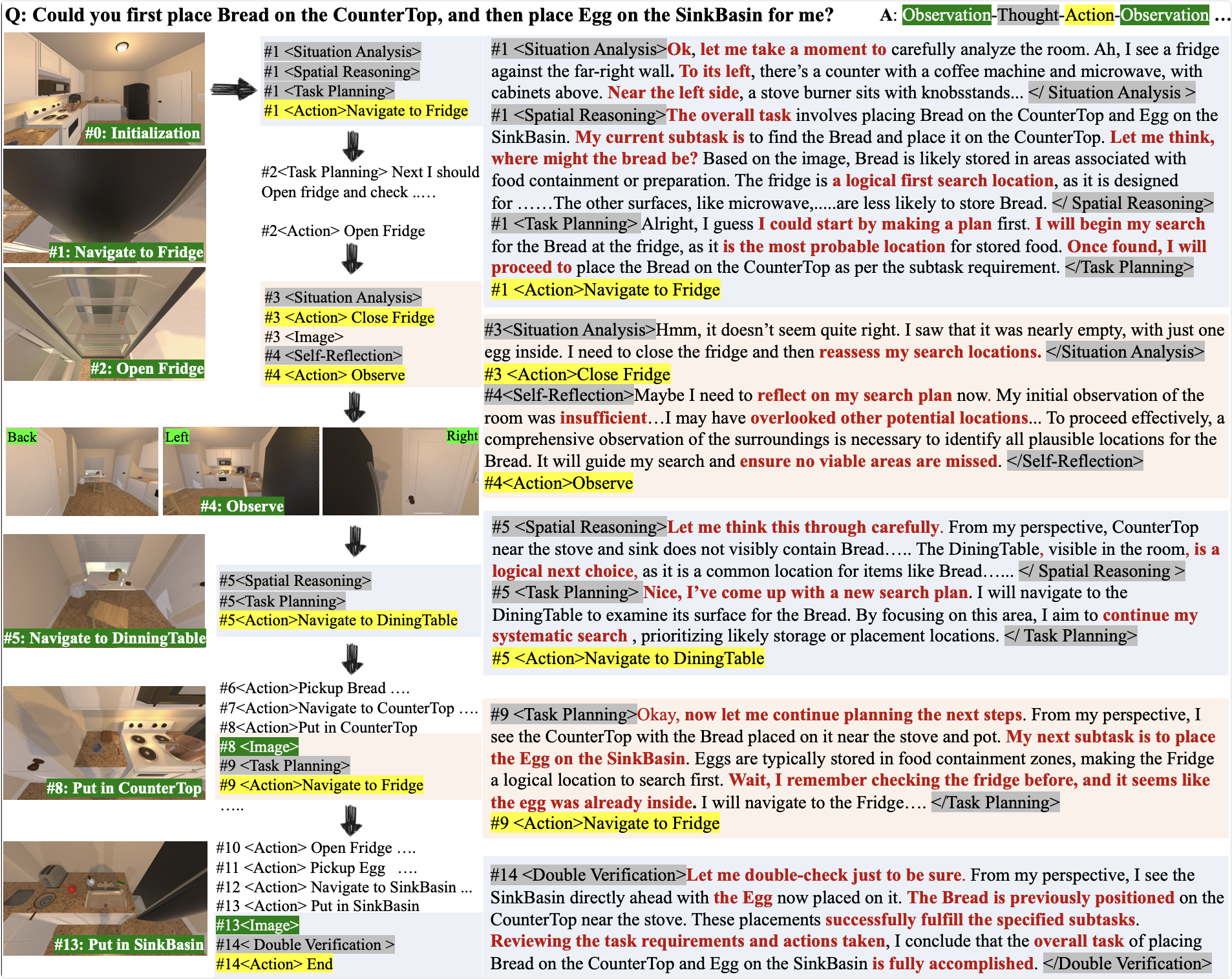

An Example Interactive Trajectory of Embodied-Reasoner

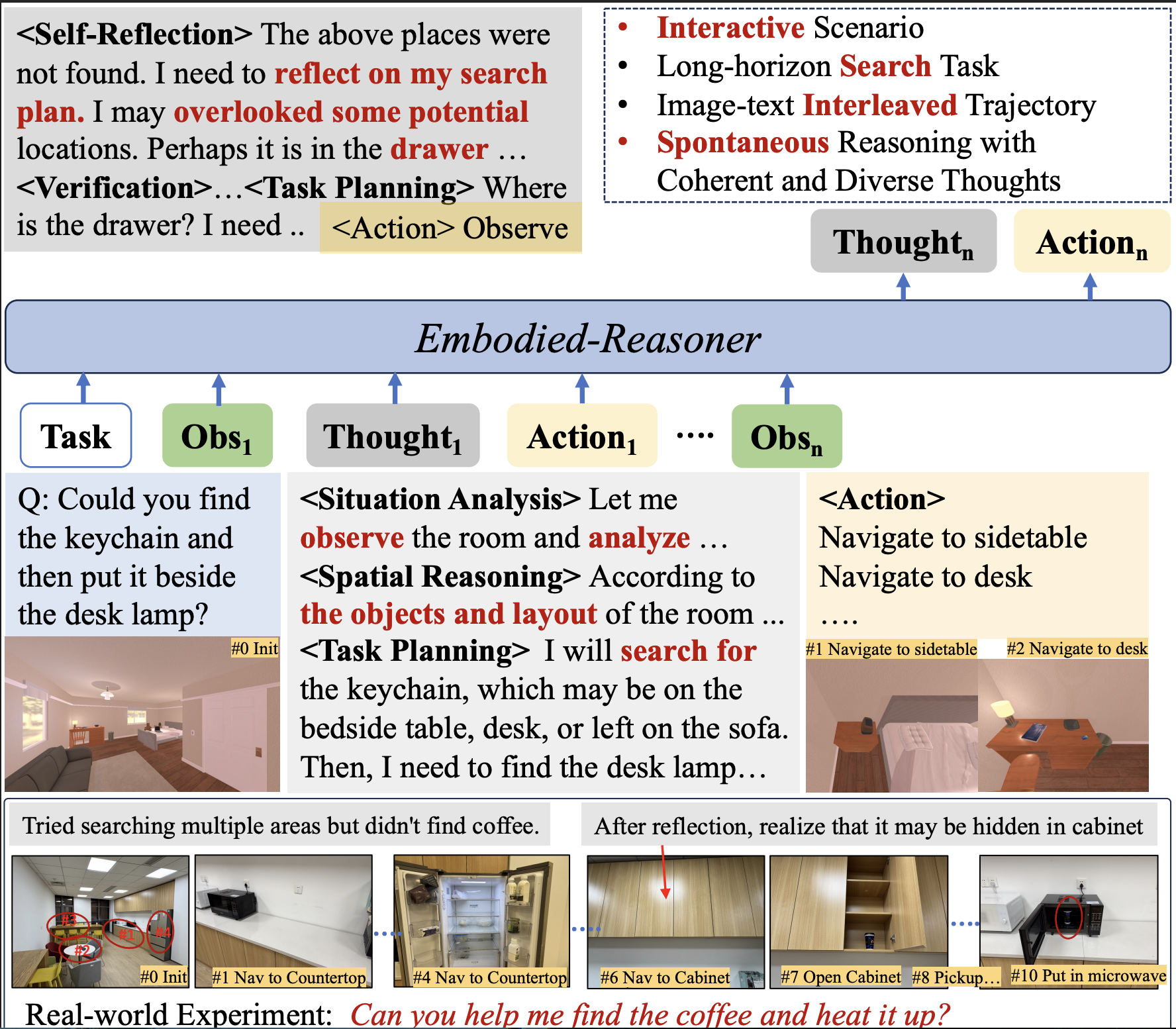

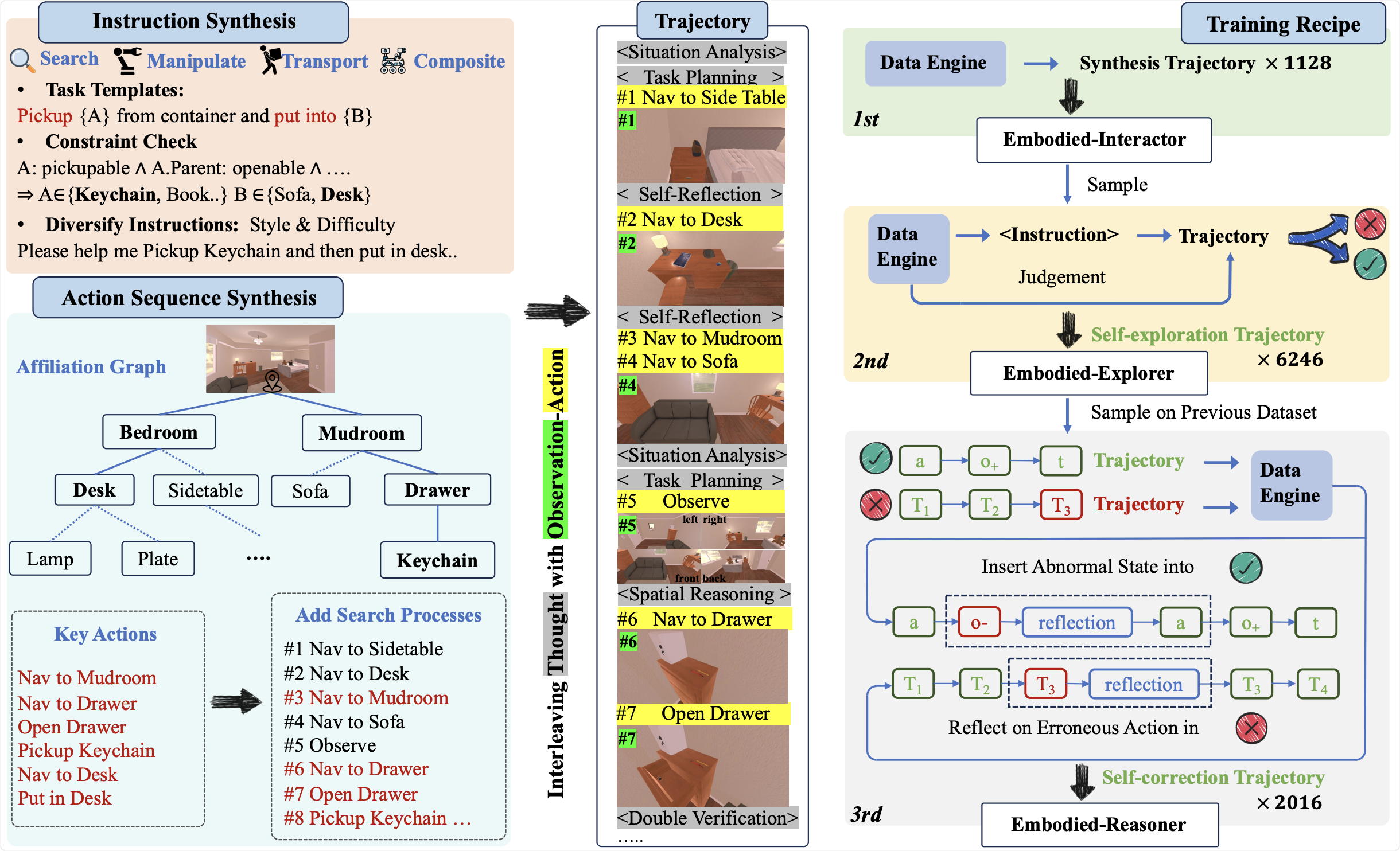

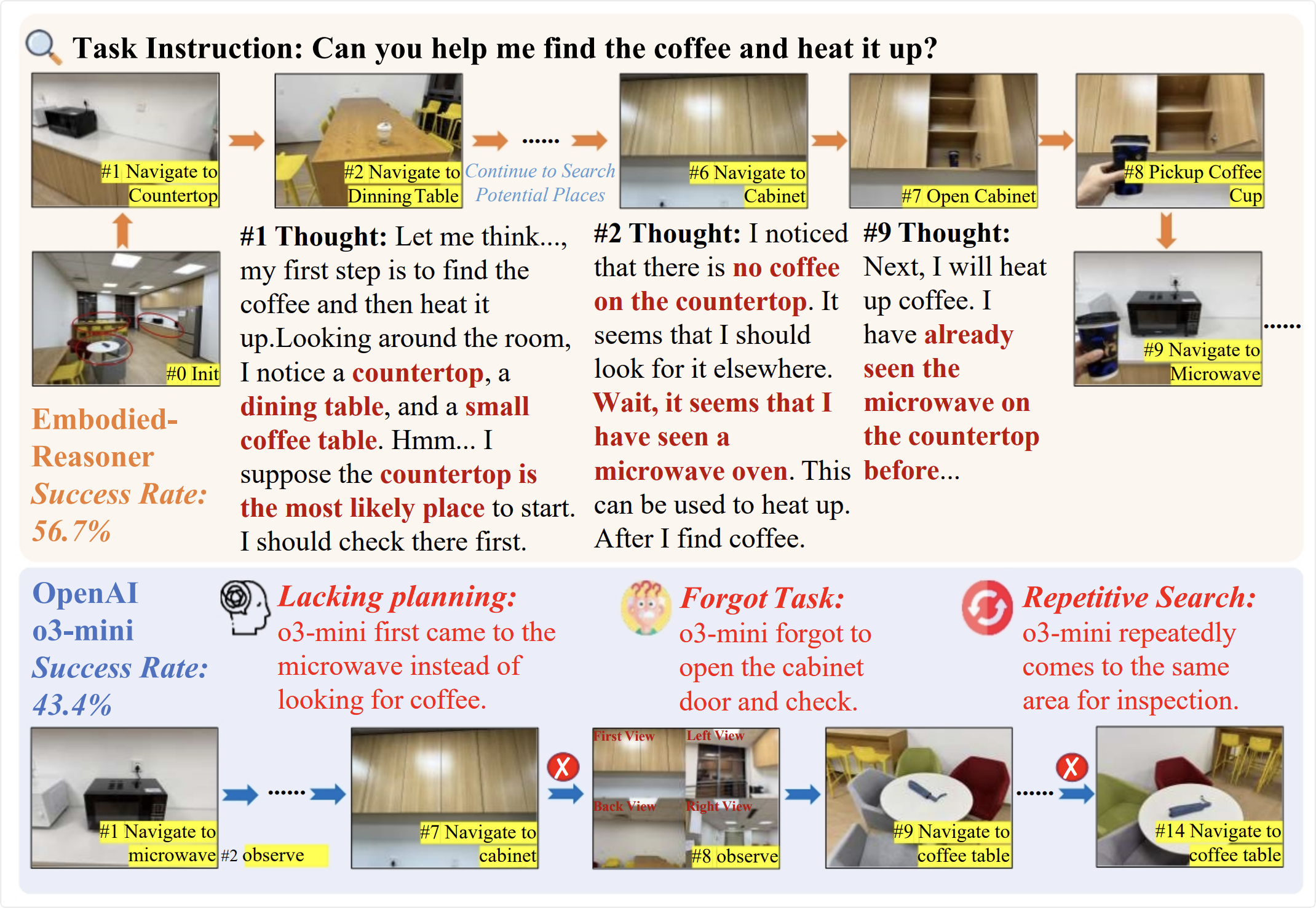

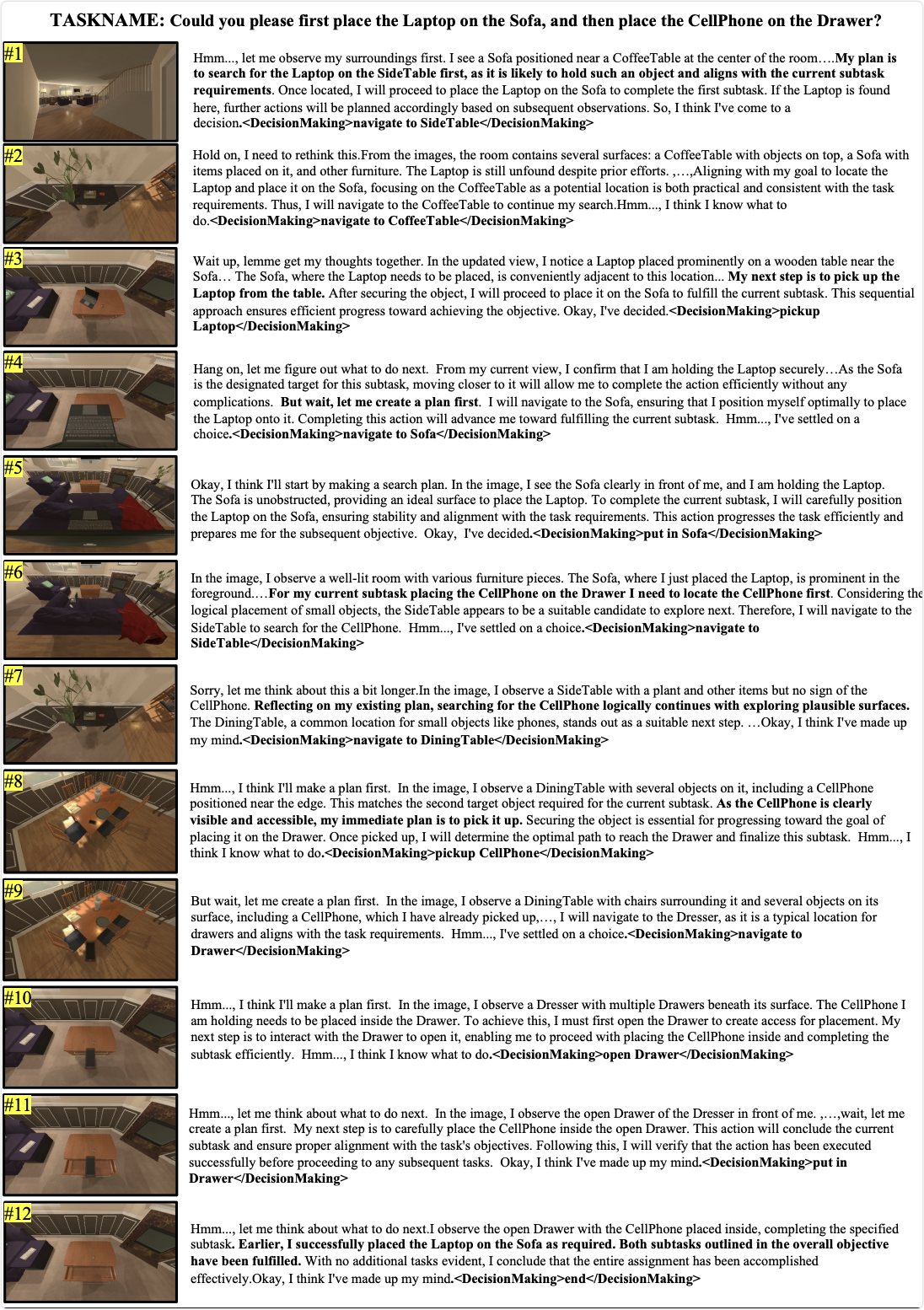

An Evaluation Interaction Trajectory of Embodied-Reasoner

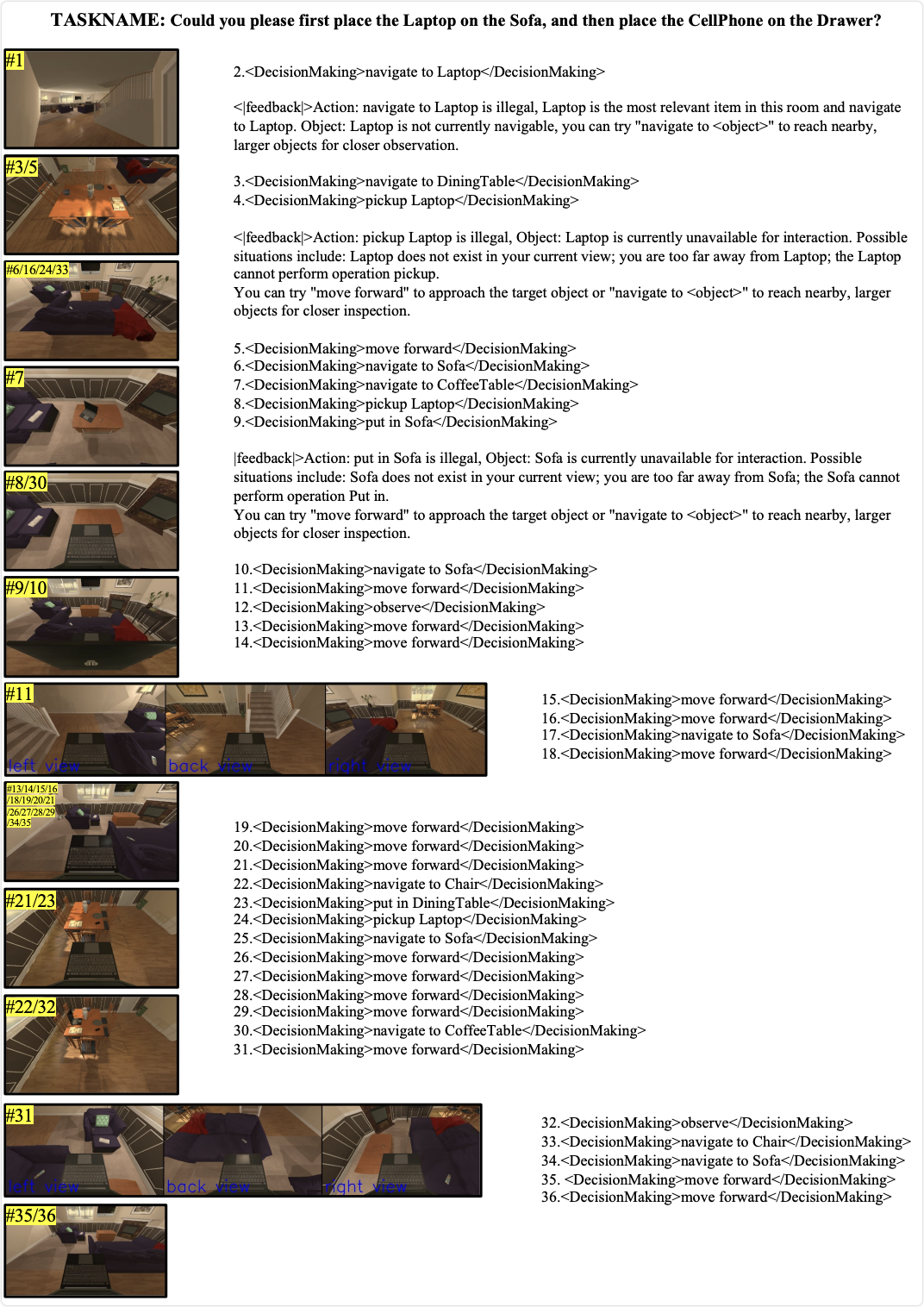

An Evaluation Interaction Trajectory of GPT-o1

A Real-World Interaction Trajectory of Embodied-Reasoner